Determining the degree of cherry ripeness is a fundamental step toward achieving truly intelligent and efficient harvesting.

Traditional methods and their limits

Traditionally, this task has relied mainly on the farmer’s trained eye or on partially automated image analysis methods.

In the former case, evaluation draws on long-term experience and on observation of fruit color, size, and surface brightness.

However, this approach is highly subjective, varies from one individual to another, and is strongly influenced by external factors such as lighting conditions and weather.

As a result, efficiency is low, labor costs are high, and the method is poorly suited to the needs of large-scale production.

Traditional image processing techniques have attempted to overcome these limitations through methods such as edge detection and threshold-based segmentation.

Typically, the process involves acquiring cherry images and extracting features such as color, texture, and shape in order to isolate the fruit region.
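Fixed-threshold color segmentation of this kind can be sketched in a few lines of Python. This is a minimal illustration, not a method from the study: the red-dominance rule, the `RED_MIN` and `RED_MARGIN` values, and the helper names are hypothetical, and the "image" is a toy 2x3 grid of RGB tuples.

```python
# Minimal sketch of fixed-threshold colour segmentation, the kind of
# hand-tuned rule traditional pipelines use to isolate fruit pixels.
# The rule and both thresholds are hypothetical, for illustration only.

Pixel = tuple[int, int, int]  # (R, G, B), each channel 0-255

RED_MIN = 120     # hypothetical: minimum red intensity for a fruit pixel
RED_MARGIN = 40   # hypothetical: red must exceed green and blue by this much


def is_fruit_pixel(pixel: Pixel) -> bool:
    """Return True if the pixel passes the fixed red-dominance rule."""
    r, g, b = pixel
    return r >= RED_MIN and r - g >= RED_MARGIN and r - b >= RED_MARGIN


def segment(image: list[list[Pixel]]) -> list[list[int]]:
    """Binary mask: 1 where the rule fires, 0 elsewhere."""
    return [[1 if is_fruit_pixel(p) else 0 for p in row] for row in image]


def darken(image: list[list[Pixel]], factor: float) -> list[list[Pixel]]:
    """Scale every channel by `factor` to mimic dimmer lighting."""
    return [[tuple(int(c * factor) for c in p) for p in row] for row in image]


# Toy 2x3 image: cherry, cherry, leaf / sky, cherry, branch
image = [
    [(200, 40, 50), (180, 60, 60), (60, 150, 70)],
    [(120, 160, 220), (210, 30, 40), (90, 110, 80)],
]
print(segment(image))               # [[1, 1, 0], [0, 1, 0]]
print(segment(darken(image, 0.5)))  # [[0, 0, 0], [0, 0, 0]]
```

Halving the brightness of the same toy image removes every fruit pixel from the mask, a small-scale version of how uneven illumination defeats hand-tuned thresholds.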

Nevertheless, in real-world environments characterized by complex backgrounds, uneven illumination, and the presence of shadows, these techniques quickly reach their limits.

Challenges in real-world environments

Variations in lighting can lead to the loss of geometric information, while difficulties in integrating multi-scale features further reduce recognition reliability.

An additional practical issue is that the computational requirements of these algorithms are often incompatible with the processing capabilities of agricultural devices, such as sensors or mobile terminals deployed in the field.

Deep learning approaches

In recent years, the rapid development of deep learning and computer vision has opened new perspectives for the automated detection of cherry ripeness.

In particular, models based on convolutional neural networks (CNNs) and the YOLO (You Only Look Once) family of algorithms have been widely adopted in agricultural applications.

Conventional CNN models, although capable of high accuracy, are computationally complex and resource-intensive, which makes practical deployment difficult.

Moreover, when processing high-resolution images, they often fail to meet the real-time requirements of on-site monitoring.

YOLO-based models, by contrast, have attracted increasing attention due to their ability to balance speed and accuracy.

Despite these advantages, cherry ripeness detection remains a challenging task for three main reasons: strong environmental interference that hampers the extraction of fruit features; the need to detect small and densely distributed targets, which increases the risk of missed detections and false positives; and the high computational load of existing models, which limits their application on agricultural devices.

The CMD-YOLO model

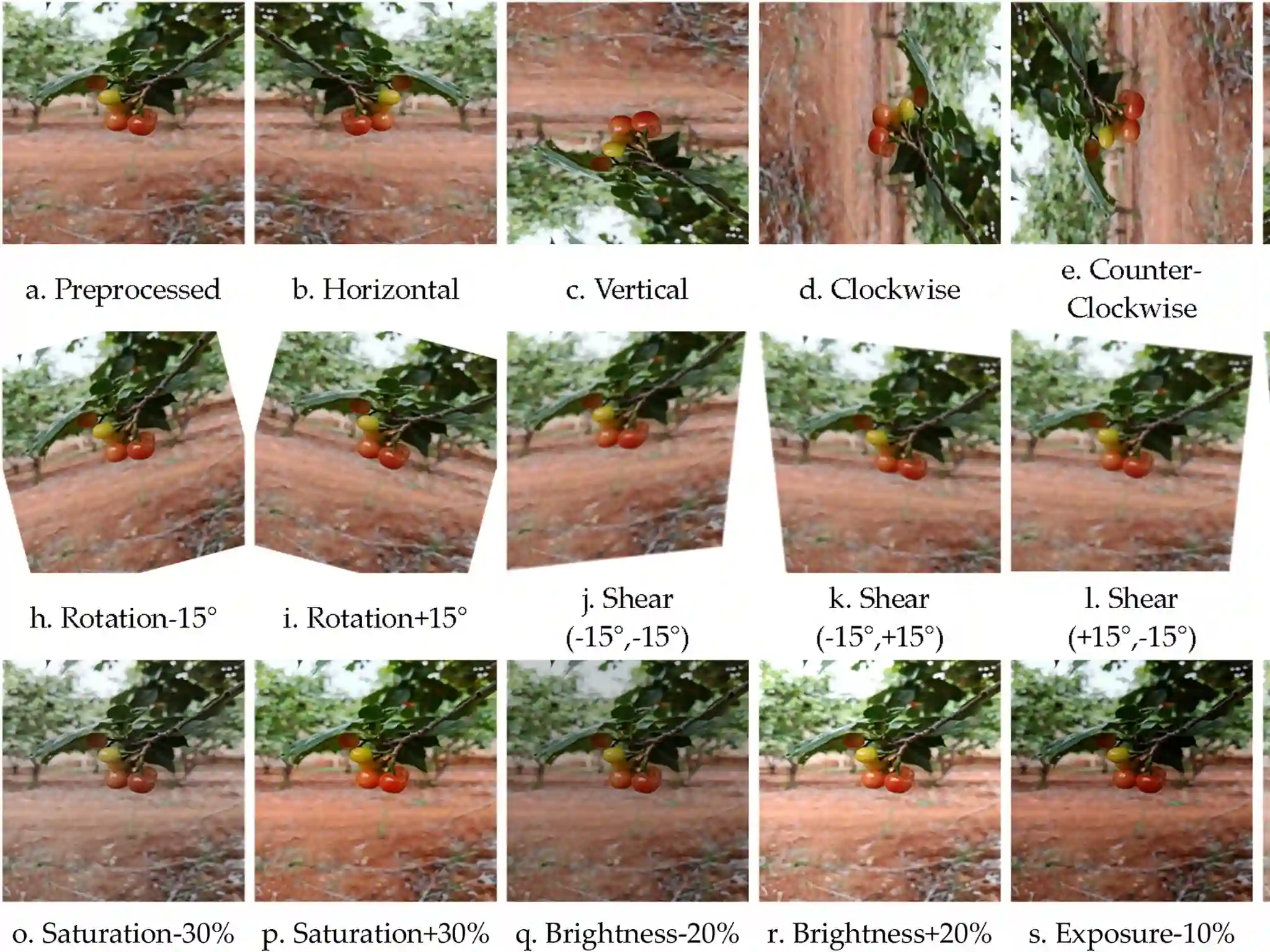

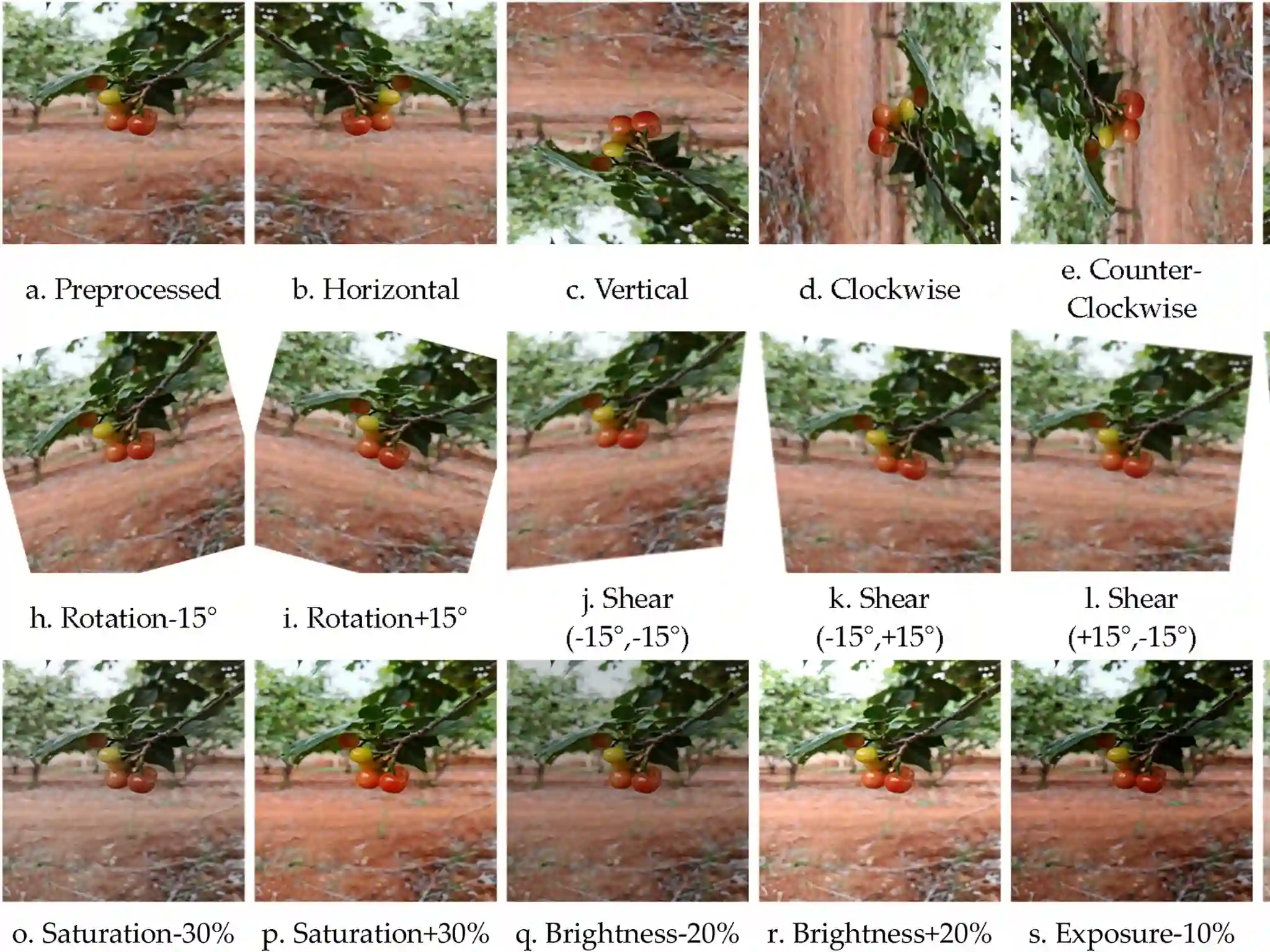

To address these challenges, a study conducted in Yunnan Province (China) proposes CMD-YOLO, a new lightweight real-time model built on an improved YOLOv12 architecture.

The model introduces an adaptive detection head that optimizes the number and scale of its detection modules, improving the perception of small and closely spaced objects.
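A rough calculation shows why small, closely spaced fruit are hard for a detection head. The Python sketch below is illustrative only: the 640-pixel input, the 24-pixel cherry, the two centre positions, and the helper names are assumptions; strides of 8, 16 and 32 are common YOLO head strides, stride 4 stands for a hypothetical finer head, and the summary does not say which scales CMD-YOLO actually uses.

```python
# Back-of-the-envelope sketch: how large does a small cherry look on the
# feature maps a YOLO-style detection head works from?
# Assumptions (hypothetical, for illustration only): a 640x640 input,
# a cherry about 24 px across, strides 8/16/32 plus a finer stride 4.


def grid_size(input_px: int, stride: int) -> int:
    """Side length, in cells, of the square feature map at this stride."""
    return input_px // stride


def cells_spanned(object_px: float, stride: int) -> float:
    """Approximate object width measured in feature-map cells."""
    return object_px / stride


def same_column(x1: float, x2: float, stride: int) -> bool:
    """True if two object centres (x-coordinates, px) fall in the same grid column."""
    return int(x1 // stride) == int(x2 // stride)


INPUT_PX = 640   # hypothetical input resolution
CHERRY_PX = 24   # hypothetical apparent cherry diameter

for stride in (4, 8, 16, 32):
    g = grid_size(INPUT_PX, stride)
    print(f"stride {stride}: {g}x{g} grid, cherry spans "
          f"{cells_spanned(CHERRY_PX, stride):.2f} cells, "
          f"centres at x=330 and x=350 share a column: {same_column(330, 350, stride)}")
```

At stride 32 the cherry covers only 0.75 of a cell and the two centres, 20 pixels apart, fall in the same grid column, so neighbouring fruit can end up competing for the same prediction location; finer strides separate them at the price of larger feature maps.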

In addition, an innovative channel fusion module based on depthwise convolutions enables more refined extraction of spatial features while reducing the impact of complex backgrounds.
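Depthwise convolutions filter each channel separately and leave channel mixing to a cheap 1x1 (pointwise) step, which is where most of their parameter savings come from. The Python sketch below shows that generic depthwise-separable pattern; it is not CMD-YOLO's channel fusion module, whose internals are not described here, and the helper names and toy inputs are hypothetical.

```python
# Minimal sketch of a generic depthwise-separable convolution in plain
# Python (no padding, stride 1, no biases). Not CMD-YOLO's actual module.

Matrix = list[list[float]]


def conv2d_single(x: Matrix, k: Matrix) -> Matrix:
    """Valid 2-D cross-correlation of one channel with one kernel."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def depthwise(channels: list[Matrix], kernels: list[Matrix]) -> list[Matrix]:
    """Depthwise step: one kernel per channel, channels are never mixed."""
    return [conv2d_single(x, k) for x, k in zip(channels, kernels)]


def pointwise(channels: list[Matrix], weights: list[list[float]]) -> list[Matrix]:
    """1x1 convolution: weights[o][c] maps input channel c to output channel o."""
    height, width = len(channels[0]), len(channels[0][0])
    return [
        [
            [sum(w * ch[i][j] for w, ch in zip(row, channels)) for j in range(width)]
            for i in range(height)
        ]
        for row in weights
    ]


def standard_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k conv followed by a 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


channels = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[9, 8, 7], [6, 5, 4], [3, 2, 1]],
]
kernels = [[[1, 0], [0, 1]], [[0, 1], [1, 0]]]
filtered = depthwise(channels, kernels)
print(filtered)                                # [[[6, 8], [12, 14]], [[14, 12], [8, 6]]]
print(pointwise(filtered, [[1, 1], [1, -1]]))  # [[[20, 20], [20, 20]], [[-8, -4], [4, 8]]]
print(standard_params(128, 128, 3), separable_params(128, 128, 3))  # 147456 17536
```

For 128 input and output channels with 3x3 kernels, a standard convolution needs 147,456 weights, while the depthwise-plus-pointwise pair needs 17,536 (biases ignored), roughly an eightfold reduction.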

Finally, a new bounding-box loss function models object shape and size more precisely, improving detection accuracy without increasing computational cost.

Results and applications

Experimental results show that CMD-YOLO significantly outperforms the baseline model, with notable improvements in precision, recall, and mean average precision (mAP), while substantially reducing the number of parameters.
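Detection metrics of this kind are usually obtained by matching predicted boxes to ground-truth boxes through their intersection over union (IoU). The Python sketch below assumes a single class, an IoU threshold of 0.5 and greedy matching in descending confidence order; these are common conventions, not the evaluation protocol reported in the study, and the toy boxes are hypothetical. Unmatched predictions count as false positives and unmatched ground truths as missed detections. Mean average precision goes further, averaging precision over recall levels (and over ripeness classes), which this sketch does not attempt.

```python
# Minimal sketch: single-class precision and recall from IoU matching.
# Assumptions: boxes are (x1, y1, x2, y2) in pixels, IoU threshold 0.5,
# greedy matching in descending confidence order.

Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    preds: list[tuple[Box, float]], truths: list[Box], iou_thr: float = 0.5
) -> tuple[float, float]:
    """Each prediction (box, confidence) may claim at most one unmatched truth."""
    matched: set[int] = set()
    for box, _conf in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
    tp = len(matched)       # true positives
    fp = len(preds) - tp    # false positives (unmatched predictions)
    fn = len(truths) - tp   # missed detections (unmatched truths)
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall


truths = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]
preds = [((1, 0, 11, 10), 0.9), ((20, 0, 30, 10), 0.8), ((60, 0, 70, 10), 0.6)]
p, r = precision_recall(preds, truths)
print(round(p, 3), round(r, 3))  # 0.667 0.667
```

In the toy example, two predictions overlap real fruit, one lands on empty background (a false positive) and one cherry is never found (a missed detection), so precision and recall are both two thirds.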

These advantages make the proposed model particularly suitable for detecting dense, small cherry targets in complex environments, providing a solid foundation for practical applications in intelligent harvesting and precision agriculture.

Source: Meng Li, Xue Ding, Jinliang Wang (2025). CMD-YOLO: A lightweight model for cherry maturity detection targeting small object. Smart Agricultural Technology, vol. 12, article 101513, December 2025. https://doi.org/10.1016/j.atech.2025.101513

Image source: Meng Li et al., 2025

Melissa Venturi

University of Bologna (IT)

Cherry Times - All rights reserved